Training

Training used the full dataset, with between 10 and 25 percent of the data held out as validation data.

An evaluation hold-out was implemented later, with a similarly variable portion of the dataset reserved as evaluation-only data. Both held-out sets served to identify overfitting.
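As a rough illustration of the hold-out scheme described above, the following is a minimal sketch, not the code used in this project. The function name `split_dataset`, its parameters, and the default fractions are hypothetical, and shuffling before splitting is an assumption; for time-ordered data a chronological split may be more appropriate.

```python
import random


def split_dataset(samples, val_fraction=0.2, eval_fraction=0.1, seed=0):
    """Split samples into train, validation, and evaluation-only lists.

    Hypothetical helper: the fractions are illustrative values inside the
    10 to 25 percent range mentioned above, not the project's settings.
    """
    if val_fraction < 0 or eval_fraction < 0:
        raise ValueError("fractions must be non-negative")
    if val_fraction + eval_fraction >= 1:
        raise ValueError("held-out fractions must leave data for training")

    # Shuffle indices with a fixed seed so the split is reproducible.
    indices = list(range(len(samples)))
    random.Random(seed).shuffle(indices)

    n_eval = int(len(samples) * eval_fraction)
    n_val = int(len(samples) * val_fraction)

    # The three index ranges are disjoint, so no sample is shared.
    eval_idx = indices[:n_eval]
    val_idx = indices[n_eval:n_eval + n_val]
    train_idx = indices[n_eval + n_val:]

    def pick(idx):
        return [samples[i] for i in idx]

    return pick(train_idx), pick(val_idx), pick(eval_idx)


if __name__ == "__main__":
    train, val, evaluation = split_dataset(list(range(100)))
    print(len(train), len(val), len(evaluation))
```

Keeping the evaluation set entirely out of the training loop means a gap between validation and evaluation scores can hint that choices were tuned too closely to the validation data.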

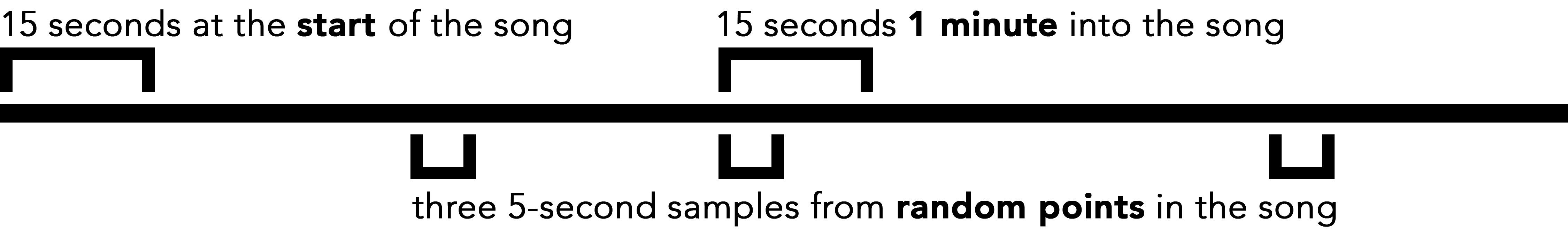

Several different windows were used: